The pool of talented people interested in pursuing a graduate business degree has never been more global and diverse. In order to fairly evaluate applicants from such a range of educational and professional backgrounds, it’s very helpful for business school admission committees to have GMAT™ exam scores as a means of comparing applicants’ skills and potential classroom success on the same scale. For decades, the world’s top business schools have trusted the GMAT exam because it’s specifically validated to predict performance in the first year of an MBA program.

Because the GMAT’s score range has such a long and established history, schools and applicants alike want to be able to do a GRE to GMAT conversion. In other words, they want to be able to directly compare the minority of applicants who apply with a GRE score to the majority of applicants who apply with a GMAT score and draw conclusions about their relative levels of preparedness for business school success. But is there really a valid way to do that?

GRE to GMAT conversion: Can you convert your GRE score to a GMAT score?

Bottom line: You can’t convert a GRE score to a GMAT score, and vice versa. The GRE and GMAT exams are different tests, measuring different content, and no conversion table or conversion tool can ever make them equivalent. The best way to know what your GMAT score would be is to prepare for and take the GMAT exam.

Is the content of the two exams really that different? Yes, it is.

The GMAT exam is the only admissions test designed specifically to be used for admissions to graduate business programs. It measures the higher-order skills most relevant to success in a graduate business program. Its four sections target specific skills that are highly relevant to the business career path you aspire to.

The GRE, on the other hand, is (by design and name) a general test, and its three sections are not related to any specific field of study. Only three percent of test takers use their scores for admission to MBA or specialized master’s in business programs.*

Debunking the GRE Comparison Tool for Business Schools and other GMAT conversion tables

For more than a decade, the Educational Testing Service (ETS), the makers of the GRE General Test, have hosted on their website and promoted to business schools a tool that they claim can predict GMAT scores based on GRE scores.

The tool uses three linear regression models to predict GMAT Total, GMAT Quant, and GMAT Verbal scores, and ETS claims that “the predicted score range is approximately +/- 50 points for the Total GMAT score and +/- 6 points on the Verbal and Quantitative scores.”
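The kind of model described here can be sketched in a few lines. This is a minimal illustration, assuming a single predictor and ordinary least squares; the function names and toy numbers are hypothetical, and ETS’s actual predictors, coefficients, and data are not reproduced. It shows how a point prediction and a symmetric “±” band come out of such a model, with the band set to k standard errors of estimate.

```python
import math


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def standard_error_of_estimate(x, y, intercept, slope):
    """Residual standard deviation, using n - 2 degrees of freedom."""
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return math.sqrt(sse / (len(x) - 2))


def predict_with_band(x0, intercept, slope, se, k=1.0):
    """Point prediction with a symmetric +/- k * SE band around it."""
    center = intercept + slope * x0
    return center, center - k * se, center + k * se


# Toy data for illustration only (not real GRE or GMAT scores).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b0, b1 = fit_line(x, y)
se = standard_error_of_estimate(x, y, b0, b1)
print(predict_with_band(3.5, b0, b1, se))
```

With k = 1 and normally distributed errors, such a band covers roughly 68 percent of cases, and it leaves out the extra uncertainty that comes from estimating the line itself.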

There are several critical flaws in research design and interpretation—as well as misleading statements from ETS—that ultimately make this tool completely baseless and a disservice to test takers and business schools alike.

Data-related issues

The tool’s linear regression models were built on data from 472 test takers who took both the GRE and the GMAT within one year of each other, between August 2011 and December 2012.

This data is outdated, and its integrity is unverified. The GMAT testing population of ten years ago is completely different from today’s more globalized testing pool, and the score distributions for both the Quant and Verbal sections have changed substantially, so this outdated data is no longer relevant. In addition, all of the score data was self-reported by participants, and there is no evidence that its accuracy was verified. ETS admits this issue openly.

There are also several sampling issues. There is no evidence of a representative sampling process. ETS provides no information about the characteristics of the sampled cases, and they report no basic descriptive statistics for the GRE and GMAT score distributions, such as mean, standard deviation, skewness, and kurtosis. Omitting such key information is extremely unusual and indicates ETS’s lack of confidence in the representativeness of the sample data.
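For reference, the statistics named above take only a few lines to compute for each score sample. This is a minimal sketch using Python’s standard library; the function name `describe` is hypothetical, and skewness and kurtosis use the simple moment-based formulas (other software may apply small-sample corrections and report slightly different values).

```python
import statistics


def describe(scores):
    """Summary statistics for one score sample.

    Skewness and kurtosis use moment-based (population) formulas:
    g1 = m3 / m2**1.5 and excess kurtosis g2 = m4 / m2**2 - 3.
    A constant sample (m2 == 0) raises ZeroDivisionError.
    """
    n = len(scores)
    mean = statistics.fmean(scores)
    m2 = sum((s - mean) ** 2 for s in scores) / n
    m3 = sum((s - mean) ** 3 for s in scores) / n
    m4 = sum((s - mean) ** 4 for s in scores) / n
    return {
        "n": n,
        "mean": mean,
        "sd": statistics.stdev(scores),  # sample SD (n - 1 denominator)
        "skewness": m3 / m2 ** 1.5,  # negative = long left tail
        "excess_kurtosis": m4 / m2 ** 2 - 3,  # 0 for a normal distribution
    }
```

Calling it on each half of a paired sample, for example `describe(gre_scores)` and `describe(gmat_scores)` (hypothetical variable names), would give the kind of side-by-side summary the text says is missing.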

In fact, there is evidence that the sample was unrepresentative. ETS unintentionally revealed the standard deviation (SD) of GMAT scores they observed in their equation for computing prediction errors, and the SD of GMAT Total scores used to develop their prediction model (SD = 136.8) was substantially different from that of the actual GMAT population in 2018-2020 (SD = 114.7).

Differences in test preparation and motivation across participants were not controlled for. According to what we’ve learned from candidates and test prep organizations, candidates do not prepare equally for the GMAT and GRE exams. Candidates usually try the GMAT exam first, and if their scores are lower than expected, they sometimes decide to prepare for the GRE (or vice versa). Given this typical pattern among candidates who end up taking both the GMAT and the GRE, there is little reason to believe that their GMAT and GRE scores equally reflect their preparedness.

In addition, ETS did not explain how they controlled for motivational differences across participants, particularly given the widespread strategy of repeat testing. An examinee’s motivation on a first attempt is often different: rather than trying their best for the highest score, they may simply scan through the test content for the “experience” itself.

ETS admits these issues openly as well.

Methodological and procedural issues

The use of linear regression models must have resulted in systematic prediction errors (i.e., score bias), and the difference in measurement error between the GRE and GMAT was completely overlooked.

Correlation does not guarantee linearity. GRE Quant scores follow a distribution close to a normal, bell-shaped curve, while GMAT Quant scores are very negatively skewed. Given these substantially different distribution shapes, even if the rank order of GRE scores were perfectly preserved in GMAT scores (which is not true at all), their relationship would be expected to be non-linear, especially for top scorers. As a consequence, the estimated prediction models are expected to introduce substantial prediction bias, especially toward the top end of the score range.
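The point about rank order and linearity can be illustrated with synthetic numbers. The sketch below is a hypothetical illustration, not a model of real GRE or GMAT data: it takes a bell-shaped predictor, applies a strictly increasing but concave transform so that rank order is perfectly preserved while the outcome becomes negatively skewed, and fits a straight line by ordinary least squares. The residuals come out systematic rather than random.

```python
import math
from statistics import NormalDist


def ols(x, y):
    """Ordinary least squares fit; returns (intercept, slope)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


# Predictor: evenly spaced quantiles of a standard normal (bell-shaped).
# Hypothetical, unitless scale.
n = 99
x = [NormalDist().inv_cdf((i + 0.5) / n) for i in range(n)]

# Outcome: strictly increasing and concave in x, so rank order is perfectly
# preserved, but values bunch up near a ceiling (negative skew).
y = [-math.exp(-xi) for xi in x]

b0, b1 = ols(x, y)
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

# A concave relationship fitted by a straight line leaves residuals that are
# negative at both extremes and positive in between.
print(f"bottom residual: {residuals[0]:.3f}")
print(f"middle residual: {residuals[n // 2]:.3f}")
print(f"top residual:    {residuals[-1]:.3f}")
```

Because the residual at the top of the range is negative, the straight line overpredicts there in this toy setup, even though the ranking of cases is identical on both variables.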

Fundamental differences in test design were totally ignored. The GRE’s conditional standard error of measurement (CSEM) across score areas is expected to differ significantly from the GMAT’s because of their different test designs: Multistage Testing (MST) for the GRE vs. Computerized Adaptive Testing (CAT) for the GMAT. The GMAT’s CAT algorithm tightly controls the CSEM across the entire reported score range, so the CSEM for GMAT Total is consistently around 30 to 40 points, even in the higher score area (above 700). The GRE, however, has just two stages in its MST design (i.e., it is only 50 percent adaptive at best), so substantial fluctuations in CSEM are expected, especially in the higher score area.
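One standard way to see why test design affects the CSEM comes from item response theory, where the CSEM at an ability level is commonly approximated as 1 divided by the square root of the test information at that level. The sketch below is a simplified, hypothetical illustration under a two-parameter logistic (2PL) model with identical item discriminations; it works on the ability (theta) scale rather than the reported score scale, and it does not reproduce the actual GRE or GMAT item pools or routing rules. It compares a form whose items are all pitched at average difficulty with an idealized form whose items are all pitched at the examinee’s own level.

```python
import math


def p_correct(theta, a, b):
    """2PL probability of a correct response (discrimination a, difficulty b)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item at ability theta: a^2 * P * (1 - P)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def csem(theta, difficulties, a=1.0):
    """CSEM on the ability scale: 1 / sqrt(total test information at theta)."""
    info = sum(item_information(theta, a, b) for b in difficulties)
    return 1.0 / math.sqrt(info)


N_ITEMS = 30  # hypothetical test length

for theta in (0.0, 1.0, 2.0, 2.5):
    # Non-adaptive form: every item at average difficulty (b = 0).
    fixed = csem(theta, [0.0] * N_ITEMS)
    # Idealized fully adaptive form: every item at the examinee's level.
    targeted = csem(theta, [theta] * N_ITEMS)
    print(f"theta={theta:+.1f}  fixed CSEM={fixed:.3f}  targeted CSEM={targeted:.3f}")
```

In this toy setup the two forms are equally precise at average ability, but at theta = 2.5 the non-adaptive form’s CSEM is nearly twice as large. A two-stage MST routes examinees to an easier or harder second module, so it would be expected to fall somewhere between these two extremes.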

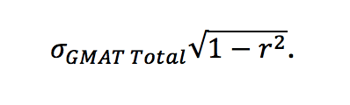

The prediction interval reported by ETS is an extreme underestimation of the truth. ETS used a basic formula to compute the standard error (SE) of prediction. Computing the SE of prediction with this equation, however, is appropriate only when several key assumptions are met, such as linearity, independent errors, normally distributed errors, and equal error variances. Given all the problems already described, it’s evident that all of the critical assumptions for this equation were seriously violated.
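ETS’s exact equation is not reproduced in this text. As an assumption, the textbook basic formula for the standard error of estimate in a linear regression is shown below, alongside the standard error for predicting a single individual’s score with one predictor. The second adds terms for the uncertainty in the fitted line and for the predictor’s distance from its mean; both rest on the linearity, independence, normality, and equal-variance assumptions listed above.

```latex
% Basic standard error of estimate: computed from the residuals,
% approximately the outcome's SD times sqrt(1 - r^2) for large n
s_e = \sqrt{\frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{n - 2}} \approx SD_Y \sqrt{1 - r^2}

% Standard error for predicting one individual's outcome at predictor value x_0
% (single predictor, n cases, predictor mean \bar{x})
SE_{\text{pred}}(x_0) = s_e \sqrt{1 + \frac{1}{n} + \frac{(x_0 - \bar{x})^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2}}
```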

On top of that, ETS also ignored the CSEM of GRE scores in the prediction error computation, as if the GRE were an error-free measure. In fact, GRE scores are likely to have a relatively large CSEM, especially in the higher score range. As a result, the empirical SE of prediction could be two to three times larger than what ETS estimated, which makes the predicted GMAT Total scores not very useful for any critical decision making, at the very least (if the predictions are not already completely meaningless because of all the data- and model-related issues pointed out so far).
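The omission described above, ignoring the GRE’s CSEM, can be combined with the textbook term for the predictor’s distance from its mean into a rough sketch of how a prediction SE widens. This is a hypothetical illustration, not ETS’s computation: the location term is the standard single-predictor formula, and the measurement-error term is an assumption that adds first-order propagated variance (slope squared times SEM squared) for any predictor error at that score that the residual SE does not already reflect. All function and parameter names are hypothetical.

```python
import math


def prediction_se(se_est, n, x0, x_mean, x_sd, slope=0.0, extra_sem_x=0.0):
    """Rough SE for predicting one person's outcome from one predictor score.

    se_est      : residual standard error of the fitted regression
    n           : number of cases used to fit the regression
    x0          : the individual's observed predictor score
    x_mean      : sample mean of the predictor
    x_sd        : sample SD of the predictor (n - 1 denominator)
    slope       : regression slope, used only for the measurement-error term
    extra_sem_x : ASSUMED predictor measurement error at x0 not already
                  reflected in se_est (hypothetical input)
    """
    s_xx = (n - 1) * x_sd ** 2  # sum of squared predictor deviations
    # Textbook prediction-interval factor: grows as x0 moves away from x_mean.
    location = 1.0 + 1.0 / n + (x0 - x_mean) ** 2 / s_xx
    # First-order propagation: predictor error of size extra_sem_x shifts the
    # prediction by about slope * extra_sem_x, so its variance is added on.
    propagated = (slope * extra_sem_x) ** 2
    return math.sqrt(se_est ** 2 * location + propagated)


# Only se_est = 54.8 and n = 472 come from the text; the predictor is in SD
# units here, and the slope and extra SEM are hypothetical.
basic = prediction_se(54.8, 472, x0=0.0, x_mean=0.0, x_sd=1.0)
widened = prediction_se(54.8, 472, x0=2.0, x_mean=0.0, x_sd=1.0,
                        slope=40.0, extra_sem_x=1.0)
print(round(basic, 1), round(widened, 1))
```

How much each term matters depends entirely on the inputs; the sketch only shows where the predictor’s location and its measurement error enter the prediction variance.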

ETS did not even use their own “predicted GMAT Q and V scores” to predict GMAT Total scores; instead, they introduced a separate multiple regression model. This would seem to suggest that ETS knows their own predicted GMAT section scores were not effective or appropriate for predicting GMAT Total scores.

Misleading communications

On ETS’s website, they stated, as a footnote, that “the predicted score range is approximately +/- 50 points for the total GMAT score…”

This statement is false. A “prediction interval” should always be presented with its confidence level, such as 68 percent (about ±1 SE), 95 percent (about ±2 SE), or 99 percent (about ±2.6 SE). ETS’s statement misleads the public by implying 100 percent confidence (a “predicted score range” suggests the span between the minimum and maximum possible predicted scores), when the reported values actually correspond only to a 68 percent confidence interval.
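The link between a ± range and its confidence level can be checked directly under the normal-error assumption that the basic SE formula relies on. This minimal sketch uses Python’s `statistics.NormalDist`; the helper names are hypothetical, and the SE value is the 54.8 points the text reports ETS computed. It prints how much of a normal distribution ±1, ±2, and ±3 SE cover, and how wide the 68, 95, and 99 percent intervals would be.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, SD 1


def coverage(k):
    """Probability that a normal error falls within +/- k standard errors."""
    return STD_NORMAL.cdf(k) - STD_NORMAL.cdf(-k)


def multiplier(confidence):
    """Number of SEs needed for a two-sided interval at this confidence level."""
    return STD_NORMAL.inv_cdf(0.5 + confidence / 2)


SE = 54.8  # SE for GMAT Total that the text reports ETS computed

for k in (1, 2, 3):
    print(f"+/- {k} SE covers {coverage(k):.1%}")
for level in (0.68, 0.95, 0.99):
    z = multiplier(level)
    print(f"{level:.0%} interval: +/- {z:.2f} SE = +/- {z * SE:.1f} points")
```

Under these assumptions, ±54.8 points is only about a 68 percent interval; a 95 percent interval would be roughly ±107 points and a 99 percent interval roughly ±141 points, before any of the corrections discussed here.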

Also, the SE that ETS computed was 54.8, which they rounded down to 50. More importantly, because ETS seriously underestimated the prediction SE, the actual SE is expected to be much larger than “50 points” once it properly accounts for the GRE’s SEM as well as the predictor’s distance from its mean.

For example, in the GMAT Total score range of 650 or above, which is what many schools are interested in, there is no evidence that the actual 99 percent prediction interval is any narrower than ±200 points. This means that the true GMAT scores of individual candidates whose predicted GMAT Total score was 650 could fall anywhere between 450 and 800 (the top of the GMAT Total scale) 99 percent of the time, if not worse. That holds even in the best-case scenario, in which ETS’s regression model somehow did not cause any prediction bias despite the data-related issues pointed out above.
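The upper end of that range is capped by the score scale itself. A minimal sketch, assuming the 200 to 800 GMAT Total scale; the function name is hypothetical.

```python
def interval_on_scale(center, half_width, scale_min=200, scale_max=800):
    """Clip a symmetric prediction interval to the reportable score scale."""
    low = max(scale_min, center - half_width)
    high = min(scale_max, center + half_width)
    return low, high


print(interval_on_scale(650, 200))  # → (450, 800)
```

The symmetric ±200-point band around 650 would reach 850, so the reportable range is 450 to 800.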

Why take the GMAT exam? It’s purpose-built for business school

Since 1953, the GMAT exam has been the only admissions test designed specifically to be used for admissions to graduate business programs. And because the GMAT is designed specifically for business, the time you devote to prep is an investment not only in earning your best score but also in sharpening your skills for business school and your career. The world’s leading business schools trust the GMAT exam because it’s specifically validated to predict performance in the first year of an MBA program, and your GMAT score signals to them that you’re serious about pursuing a graduate business degree from their school.

If getting into your top-choice business school is your goal, earning your best GMAT score is one of the best ways to demonstrate that you’d make an excellent addition to their next incoming class.

*ETS (2019). A Snapshot of the Individuals Who Took the GRE General Test July 2014-June 2019. Table 1.15: Graduate Degree Objective. https://www.ets.org/s/gre/pdf/snapshot_test_taker_data_2019.pdf