Graduate schools of business use a variety of admissions criteria to evaluate prospective students for enrollment. Among them, the Graduate Management Admission Test™ (GMAT™) score is one of the few data points by which all applicants’ skills and potential for classroom success can be directly compared on the same scale.

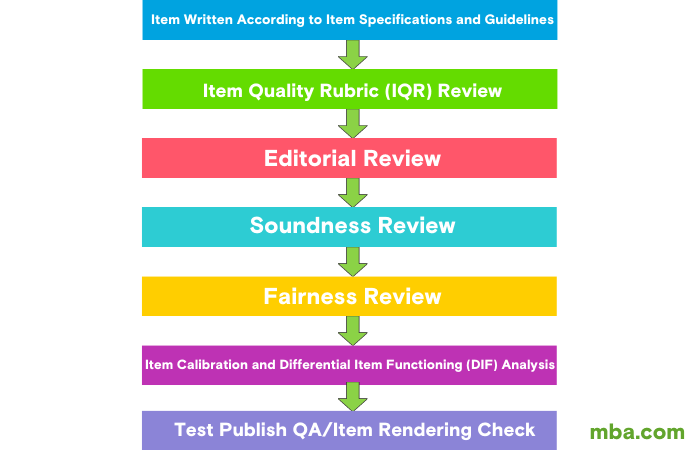

As the developer of the GMAT exam, the Graduate Management Admission Council™ (GMAC™) takes seriously both the exam’s role as a standardized metric in admissions decision making and its own mission to ensure the quality and fairness of the GMAT exam for all test takers across the globe. It does so by subjecting every potential test question (or “item”) to a rigorous seven-step development and review process before the question can be used in operational exams.

How are GMAT exam questions developed and tested for fairness?

GMAT test items are first written by qualified subject matter experts (SMEs) according to item specifications and guidelines (for example, item type, content area, cognitive type, and cognitive complexity/load). They are then checked in what’s called the item quality rubric (IQR) review, which confirms that each item measures what it was intended to measure.

Next, all items undergo an editorial review and a soundness review to eliminate any technical flaws in language and logic. After that, an international test fairness panel (whose members represent the GMAT testing population in gender, race/ethnicity, country, language, and culture) reviews every test item to screen out any potentially harmful elements that could raise cultural sensitivity or bias issues.

Following these rigorous content review processes, a pre-test analysis procedure begins.

Using the empirical response data collected during the pre-test stage, the test development team statistically calibrates all test items within a modern test development framework, item response theory (IRT), to evaluate their difficulty, discrimination power, latency, and other characteristics. The behavior of the test items across subpopulations (including but not limited to gender, race/ethnicity, age, country, and language) is then inspected in the differential item functioning (DIF) analysis.
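To make "difficulty" and "discrimination power" concrete, here is a minimal sketch of an item response function. It assumes the two-parameter logistic (2PL) model, a common IRT formulation; the article does not say which IRT model GMAC actually uses, and the names `theta`, `a`, and `b` are illustrative.

```python
import math


def prob_correct_2pl(theta, a, b):
    """Probability of a correct response under the 2PL model (an assumed model).

    theta: test taker's ability on the latent scale
    a:     item discrimination (how sharply the item separates abilities)
    b:     item difficulty (ability at which the probability is 0.5)
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))
```

Under this model, a test taker whose ability equals the item's difficulty has a 50% chance of answering correctly, and a larger `a` makes that probability change more steeply as ability moves away from `b`.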

Any item that shows differential functioning across population subgroups in this statistical analysis is discarded, even if its content raises no concerns. Only items that pass all content and statistical reviews are considered for inclusion in the operational GMAT exam. Once items are selected for a test package, they are checked one more time during the test publish QA. On average, one cycle of GMAT item development takes about 12 months.
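One widely used way to screen for differential item functioning is the Mantel-Haenszel procedure, sketched below. This is an illustration only: the article does not state which DIF method GMAC applies. The sketch assumes test takers have already been grouped into strata of matched ability, and that each stratum supplies four counts for a single item; the function names and the tuple layout are hypothetical.

```python
import math


def mantel_haenszel_odds_ratio(strata):
    """Common odds ratio for one item across ability-matched strata.

    Each stratum is a tuple of counts:
    (reference_correct, reference_incorrect, focal_correct, focal_incorrect).
    A value near 1.0 means both groups perform alike once ability is matched.
    """
    numerator = 0.0
    denominator = 0.0
    for ref_correct, ref_incorrect, focal_correct, focal_incorrect in strata:
        total = ref_correct + ref_incorrect + focal_correct + focal_incorrect
        if total == 0:
            continue  # an empty stratum carries no information
        numerator += ref_correct * focal_incorrect / total
        denominator += ref_incorrect * focal_correct / total
    if denominator == 0:
        raise ValueError("odds ratio is undefined for these counts")
    return numerator / denominator


def mh_delta(odds_ratio):
    """Convert the odds ratio to the delta scale; 0 indicates no DIF."""
    return -2.35 * math.log(odds_ratio)
```

An odds ratio above 1.0 (a negative delta) means the item favors the reference group at matched ability levels; a testing program would compare the size of that effect against its own flagging thresholds, which are not given in the article.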

The GMAT exam minimizes bias and maximizes fairness in admissions

This illustration of the GMAT exam item development process demonstrates GMAC’s dedication to creating and operating a GMAT exam that is fair and bias-free across the entire GMAT test-taking population. It also reflects the massive investment of time, effort, innovation, and specialized expertise required to develop a high-quality measurement tool such as the GMAT exam, which has become an industry standard for the critical decisions made in graduate admissions.

Generally, individual schools and programs are not well equipped to create and run their own admission tests capable of meeting all important educational standards. That is why a group of forward-thinking graduate management schools gathered in 1953 and worked to develop and operate the GMAT exam as an admissions criterion for their programs. Ever since, GMAC, on behalf of its member schools, GMAT-using schools, and graduate management education (GME) communities in 110 countries, has overseen the continuous evolution of the GMAT exam to ensure the relevance of what the exam measures, the effectiveness and appropriateness of test items, and test measurement standards that are socially and educationally responsible in every way possible.

The GMAT exam is a key element of the holistic admissions approach

Although GMAT exam scores serve a vital role in the admissions process by providing objectively quantifiable information about candidates’ ability and potential for success, GMAC advises against using the GMAT exam as the sole admissions criterion.

In fact, GMAC has always been a strong advocate of the holistic admissions approach. This approach incorporates both quantitative and qualitative data points, including recommendation letters, essays, interviews, work experience, and other indicators of social and emotional intelligence and leadership. Such an approach helps admissions professionals identify the best-qualified applicants, those who can benefit most from GME while also contributing to strong classrooms and peer groups for the school or program.

In the holistic admissions approach, the GMAT exam score acts as a solid anchor: it keeps the decision-making process from going adrift in a sea of non-standardized data points and helps the process remain objective and fair for all candidates.

The problem with overreliance on undergraduate GPAs

The undergraduate GPA (UGPA) is another meaningful indicator that captures candidates’ academic achievement during their college years; it’s often viewed as the most important predictor of candidates’ success in GME. In general, it is not unusual to observe a significant correlation between UGPA and the GPA earned in graduate programs (GGPA).

Contrary to how it may appear, however, UGPA is neither a standardized nor a necessarily objective measure of academic success. Colleges use different metrics and systems to calculate UGPA and, more importantly, often have grade distributions that vary substantially from one another. Even within the same college, grading criteria may differ across classrooms and raters.

As a result, UGPA does not offer data points with which candidates can be evaluated and compared consistently. In fact, the hundreds of predictive validity studies that GMAC has conducted for various schools and programs repeatedly find that UGPA often shows large fluctuations in its correlation with GGPA across years, even within the same program or school.

In other words, UGPA could be a useful predictor of GGPA for a given year but might be a less effective or even misleading predictor the next year. The GMAT Total Score, on the other hand, tends to show a much more stable and consistent correlation with GGPA over time.
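The kind of stability check described above can be sketched as a per-cohort correlation. This is a minimal illustration, not GMAC's validity-study methodology: it assumes a plain Pearson correlation and a hypothetical input layout that maps each cohort year to paired lists of predictor values (UGPA or GMAT Total Score) and GGPA values.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with 2+ values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / math.sqrt(var_x * var_y)


def correlation_by_year(cohorts):
    """Map each cohort year to the predictor/GGPA correlation for that year.

    cohorts: {year: (predictor_values, ggpa_values)}  (hypothetical layout)
    """
    return {year: pearson_r(pred, ggpa) for year, (pred, ggpa) in cohorts.items()}


def correlation_spread(by_year):
    """Gap between the highest and lowest yearly correlation."""
    values = list(by_year.values())
    return max(values) - min(values)
```

Running `correlation_by_year` once with UGPA as the predictor and once with the GMAT Total Score, then comparing the two `correlation_spread` values, gives a simple view of which predictor is more consistent from year to year; a wider spread corresponds to the fluctuation described above.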

Recent studies also suggest that subjective grading (grading without explicitly defined and strictly applied rubrics) invites bias in grades, often against minority groups such as students from African American families and immigrant families. In fact, in several of GMAC’s validity studies and meta-analyses, we found that the UGPA of those minority groups had a much lower correlation with GGPA, whereas the GMAT Total Score showed much more consistent predictive validity across key subpopulations by race, ethnicity, gender, and age.

The GMAT exam: a standardized metric for admissions decision making

While we recognize that bias in grading is a serious issue that all members of the educational community need to work together to address, accumulated scientific evidence indicates that GMAT exam scores greatly mitigate potential biases against minority groups in the admissions decision-making process when used together with other criteria, including UGPA.

As it has been since its beginning, GMAC is firmly committed to its mission to ensure the quality and fairness of the GMAT exam for all test takers across the globe and to aid business school admissions committees’ decision making as they build their incoming classes of future business leaders.